Jiwon Park

Biography

Passionate student specializing in robot perception, with experience developing software for robotic systems. Dedicated to advancing robust perception techniques that improve the reliability of outdoor mobile robots.

Graduated from Kyung Hee University in August 2024 with a dual major in Electronic Engineering and Software Convergence (Robot Vision Track). Gained industry experience through internships at NAVER LABS and ROBROS.

- Traversability-aware Navigation

- Off-road Mobile Robot

- Robot Perception

M.S. in Robotics, 2025 - Present

KAIST

B.S. in Electronic Engineering, Software Convergence (Robot Vision Track), 2019 - 2024

Kyung Hee University

Projects

Featured Publications

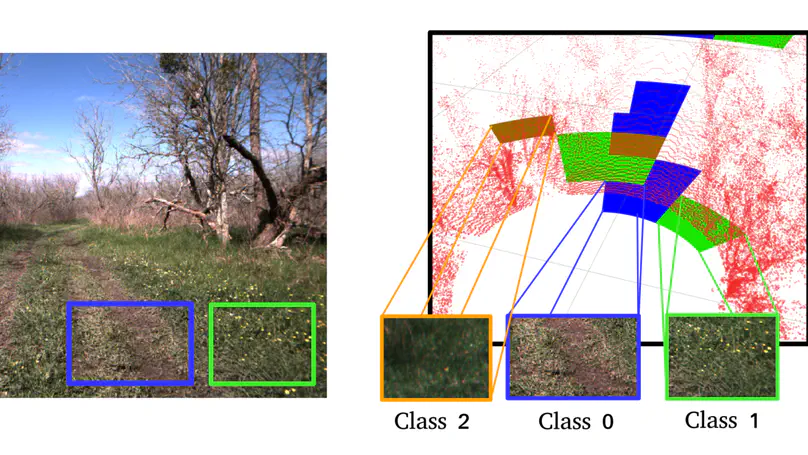

This research introduces an approach to improving autonomous mobile robot navigation in diverse outdoor environments. We developed a method that transforms 3D LiDAR point cloud data into grayscale heightmaps, enabling more accurate assessment of ground texture. Our system classifies terrain in both static and dynamic environments and incorporates IMU data to account for terrain-induced robot motion. In texture analysis, the method outperforms techniques that analyze the point cloud directly, improving the ability of mobile robots to navigate safely and efficiently across varied outdoor terrain. This capability helps protect sensitive equipment and cargo while expanding the operational range of autonomous robots in complex, real-world settings.

Publications

Experience

- Developed software for the robot's onboard inference board, enhancing onboard processing capabilities

- Implemented deep learning model optimization techniques for low-cost NPUs

- Integrated third-party robots into multi-robot control system (ARC)

- Developed monitoring applications for robot experiments

ROS1 / ROS2 / RKNN / gRPC / MQTT / CMake / Flutter

- Developed experimental environments for robotic arms using MuJoCo simulation platform

- Proposed a transformer-based model that detects collisions in robotic arms without external sensors, relying only on joint position and torque controller data

ROS1 / MuJoCo / PyTorch

- Conducted research on visual SLAM (Simultaneous Localization and Mapping) for outdoor mobile robots

- Focused on improving the accuracy and reliability of visual navigation for mobile robots

Mobile Robot / ROS / ORB-SLAM2

- Advisor: Professor Hyoseok Hwang